Visual Team Technology Techniques

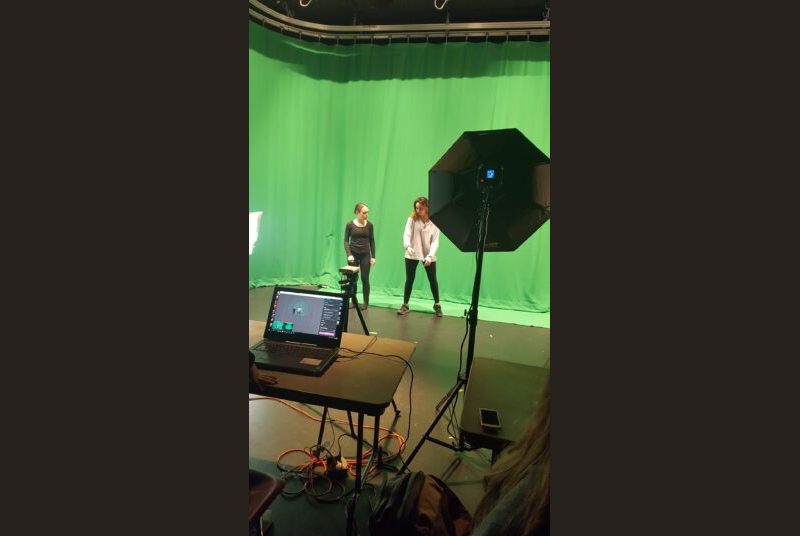

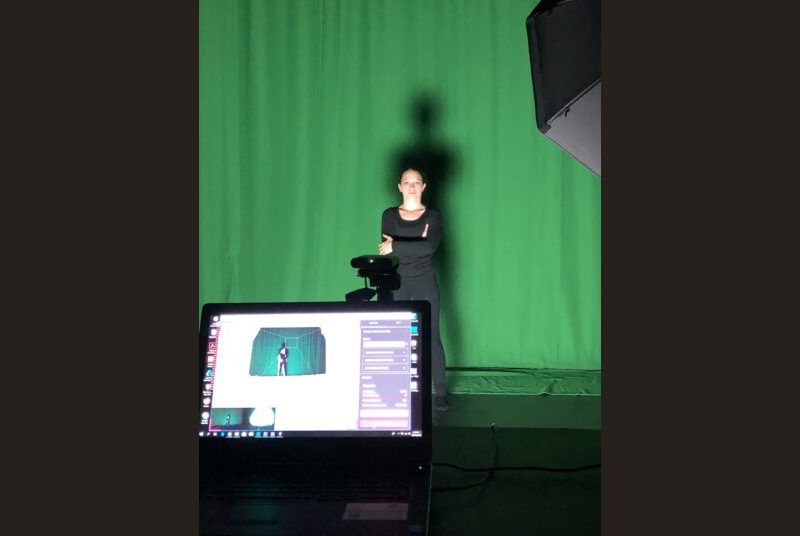

In preparation for the final show, the visual team has been storyboarding and creating assets for three different aesthetic phases of the opening act, Hitler’s Speech. Using TouchDesigner, the team can feed in live video data from a webcam or from Xbox Kinect and Azure Kinect sensors, track body movements, and use the dancer’s gestures to interfere with and alter the visuals in real time. This lets the visuals react to the dancer’s movements and mimic the flow of their gestures.
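To illustrate how a tracked gesture can drive a visual in real time, here is a minimal sketch of one common approach: normalize a single joint coordinate into a 0 to 1 control value and smooth it so the visual follows the movement without sensor jitter. It is not the team's actual network. The `GestureMapper` class, its calibration bounds, and the smoothing factor are all hypothetical, and the joint height is assumed to arrive in meters. Inside TouchDesigner the same steps would more typically be built from CHOP operators rather than written by hand.

```python
from dataclasses import dataclass


@dataclass
class GestureMapper:
    """Hypothetical helper: maps one tracked joint coordinate to a 0-1 value.

    in_min / in_max are assumed calibration bounds (meters) for the joint's
    vertical position; smoothing is an assumed exponential-smoothing factor,
    where 1.0 means no smoothing and values near 0 react very slowly.
    """
    in_min: float
    in_max: float
    smoothing: float = 0.2
    value: float = 0.0

    def update(self, joint_y: float) -> float:
        # Normalize the raw coordinate into 0-1 and clamp out-of-range readings.
        t = (joint_y - self.in_min) / (self.in_max - self.in_min)
        t = min(max(t, 0.0), 1.0)
        # Exponential smoothing: move part of the way toward the new target
        # each frame, so the visual flows with the gesture instead of jumping.
        self.value += self.smoothing * (t - self.value)
        return self.value


# Usage: feed one joint reading per frame and drive a visual parameter
# (for example, the intensity of a distortion effect) with the result.
mapper = GestureMapper(in_min=0.0, in_max=2.0, smoothing=0.5)
for hand_height in [2.0, 2.0, 2.0]:
    intensity = mapper.update(hand_height)
```

With `smoothing=0.5`, a hand raised straight to the top of the range moves the value to 0.5, then 0.75, then 0.875 over three frames, which gives the visual a softened, flowing response rather than an instant snap.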

The visual team set up a studio session to record the exact gestures performed by the dancer in front of a green screen. The biggest difference between recording the dancer with an ordinary video camera and recording with the Azure Kinect is that the Azure Kinect also captures depth data, so the performance is stored as 3D information rather than as a flat 2D video. This lets the visual team play the recording back at any time and feed its depth data into TouchDesigner, testing the visuals in real time without needing the dancers to be physically present. The green screen cleans up the dancer’s silhouette, ensuring that every body gesture is clearly recorded without unwanted noise. Now that the full dance has been recorded with depth information, the data can be fed into TouchDesigner to refine the visuals created in the software.
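To show why a depth recording carries more than flat video, here is a minimal sketch of how each depth pixel can be turned into a 3D point using a standard pinhole camera model. It assumes depth values in millimeters with 0 meaning "no reading", and the intrinsics `fx`, `fy`, `cx`, `cy` are placeholder parameters; real values would come from the sensor's calibration. The function name `depth_to_points` is hypothetical and is not part of TouchDesigner or the Azure Kinect SDK.

```python
from typing import List, Tuple

Point3D = Tuple[float, float, float]


def depth_to_points(depth_mm: List[List[int]], fx: float, fy: float,
                    cx: float, cy: float) -> List[Point3D]:
    """Back-project a depth image into 3D points (meters), pinhole model.

    depth_mm: row-major grid of depth readings in millimeters (0 = no data).
    fx, fy: assumed focal lengths in pixels; cx, cy: assumed principal point.
    """
    points: List[Point3D] = []
    for v, row in enumerate(depth_mm):        # v = pixel row
        for u, d in enumerate(row):           # u = pixel column
            if d == 0:
                continue                      # skip pixels with no depth reading
            z = d / 1000.0                    # millimeters -> meters
            # Offset from the principal point, scaled by depth / focal length,
            # gives the point's sideways and vertical position in space.
            x = (u - cx) * z / fx
            y = (v - cy) * z / fy
            points.append((x, y, z))
    return points


# Usage: a tiny 2x2 depth frame with two valid pixels.
frame = [[0, 2000],
         [1000, 0]]
cloud = depth_to_points(frame, fx=1.0, fy=1.0, cx=0.0, cy=0.0)
```

A 2D video frame only stores a color per pixel, whereas here every valid pixel becomes an (x, y, z) position, which is what allows a recorded performance to be replayed as spatial data. Dropping zero-depth pixels also mirrors, in a rough way, the goal of the green screen: keeping only the dancer and discarding noise.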